A Short Recipe for Test-driven Architecture

This blog post about test-driven architecture is written by Marko Bjelac, the Lead Software Developer of Software Sauna. Software Sauna is a Finnish-owned full-stack development company that delivers quality code from Croatia, agilely and remotely.

Introduction

Here I describe an outside-in approach to test-driven architecture, or test-driven development (TDD) as some like to call it. “Recipe” is in the title intentionally, as this is just one of many possible approaches to achieve an effective TDD workflow.

Introduction 2: What is a unit test?

You should write lots of unit tests, adhering to the Test Pyramid metaphor. However, a unit test doesn’t have to be a small test testing a small piece of code. It just has to be a test testing a small piece of behaviour. What counts as small or big behaviour depends on the level of abstraction you are on. This video has a good explanation.

In this recipe, you will see that I prefer two types of tests: acceptance tests (specifications) and micro-tests. Specifications test the whole system (one behaviour at a time) and micro-tests test small pieces of the system.

Since both are unit tests, having plenty of both is fine (although I tend to have more micro-tests than specifications).

The recipe for test-driven architecture

Important note: The steps below should be done per feature, not per system. Don’t do step 1 for all features, then step 2 for all features… First, do all steps for feature 1, then do all steps for feature 2, etc.

Step 1: Specify what the customer wants

Ingredients:

- You, the developer(s)

- Your customer (or a knowledgeable representative)

- A tester would also be nice, as testers have a knack for uncovering interesting ways of using software

“Specify” means:

- Talk to the customer

- Write a specification

Those two should be as close together in time as possible, ideally one after another, or even at the same time – with the customer actively involved in the writing of the specification.

Specification: A “developer” test – a piece of code executable within the test framework of your choice.

Try to make the test code readable to the customer with whom you’re communicating about the requirement. The test should read like a list of examples describing the customer using the software. You can use tools for that (Cucumber, FitNesse), but modern languages have rich syntaxes, and with a few tricks you can use their built-in syntax to get the same readability.
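As a rough illustration of getting that readability from plain language syntax, here is a minimal sketch in TypeScript. Everything in it is an assumption made for the example: the `CookieShop` class, its methods, and the `given`/`when` helpers are hypothetical names, not part of any tool mentioned above. The shop is implemented in the same file only so the sketch runs. In the recipe itself the specification is written first and fails until the system exists.

```typescript
import { strict as assert } from "node:assert";

// Hypothetical system under test, kept in-file so the sketch is runnable.
class CookieShop {
  private stock = new Map<string, number>();

  restock(cookie: string, count: number): void {
    this.stock.set(cookie, this.available(cookie) + count);
  }

  available(cookie: string): number {
    return this.stock.get(cookie) ?? 0;
  }

  // Returns true when the order could be filled from stock.
  order(cookie: string, count: number): boolean {
    if (count > this.available(cookie)) return false;
    this.stock.set(cookie, this.available(cookie) - count);
    return true;
  }
}

// Small helpers named in the customer's words make examples read like sentences.
const given = (shop: CookieShop) => ({
  has: (count: number, cookie: string) => shop.restock(cookie, count),
});
const when = (shop: CookieShop) => ({
  customerOrders: (count: number, cookie: string) => shop.order(cookie, count),
});

// Specification: a customer can only buy cookies that are in stock.
function customerCanOnlyBuyCookiesInStock(): void {
  const shop = new CookieShop();
  given(shop).has(3, "chocolate chip");

  // Example 1: an order that fits the stock is filled.
  assert.equal(when(shop).customerOrders(2, "chocolate chip"), true);
  assert.equal(shop.available("chocolate chip"), 1);

  // Example 2: an order larger than the stock is refused and changes nothing.
  assert.equal(when(shop).customerOrders(5, "chocolate chip"), false);
  assert.equal(shop.available("chocolate chip"), 1);
}

customerCanOnlyBuyCookiesInStock();
```

The helpers are ordinary closures, so no extra tooling is needed. Whether this reads well enough for a given customer is a judgment call, and a dedicated tool may still be the better fit.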

The specification will have two sets of interactions:

- Interacting with the system

- Interacting with the “test doubles” – code set up around the system to isolate it from the real world

Leave the test double setup for the next step, as the customer doesn’t care about that.

Other steps require only the developer(s).

Step 2: Encapsulate (if needed)

If your system just has an interface (an API or (G)UI) for its customers and doesn’t talk to anything else, you’re done!

Some simple games are like this – think about it: You can play Tetris without the need to get data from a service. Everything can be initialized in the source-code of the game itself. Until you want to save your score…

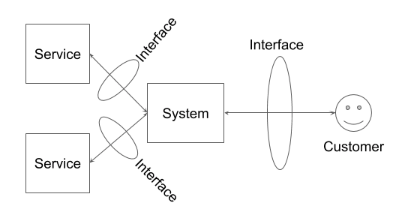

Most software, however, has additional components it talks to and those components are not your job to implement.

Here is a simple example:

Interfaces. Interfaces everywhere! But they will help us do this:

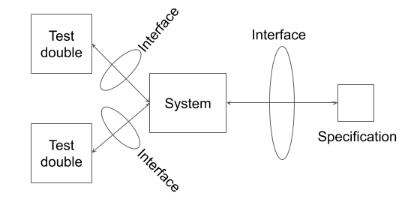

Everything is the same – we just swapped some stuff for other stuff:

- Customer is replaced by the Specification

- Services are replaced by Test doubles

The system we are testing doesn’t know about the swap because it’s decoupled from external stuff with the help of interfaces. You don’t even need to know the exact service which will be used – just its interface! That way you can defer the decision about which type of service to use (e.g. what database).
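A minimal sketch of that swap, assuming a game that saves scores as in the Tetris example above. The names `ScoreStore`, `Game` and `InMemoryScoreStore` are hypothetical and chosen only for illustration.

```typescript
import { strict as assert } from "node:assert";

// Hypothetical interface: the only thing the system knows about score storage.
interface ScoreStore {
  save(player: string, score: number): void;
  best(player: string): number | undefined;
}

// The system under test depends on the interface, never on a concrete service.
class Game {
  private readonly scores: ScoreStore;

  constructor(scores: ScoreStore) {
    this.scores = scores;
  }

  // Records the score only if it beats the player's previous best.
  finish(player: string, score: number): boolean {
    const previous = this.scores.best(player);
    if (previous !== undefined && previous >= score) return false;
    this.scores.save(player, score);
    return true;
  }
}

// Test double: an in-memory stand-in for whichever real store is chosen later.
class InMemoryScoreStore implements ScoreStore {
  private readonly byPlayer = new Map<string, number>();

  save(player: string, score: number): void {
    this.byPlayer.set(player, score);
  }

  best(player: string): number | undefined {
    return this.byPlayer.get(player);
  }
}

// The specification takes the customer's place and wires in the double.
const store = new InMemoryScoreStore();
const game = new Game(store);
assert.equal(game.finish("ana", 1200), true);
assert.equal(game.finish("ana", 900), false);
assert.equal(store.best("ana"), 1200);
```

Because `Game` only sees `ScoreStore`, a database-backed or HTTP-backed implementation could later replace the in-memory one without touching the game or the specification.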

Note: We are already in the outer test-driven architecture cycle.

Run the specification. It should fail for expected reasons. If it does not, find out why not. The specification interacts with the system through an API. Go through each of the API endpoints used in the specification and implement them. How? Read on.

Step 3: Interactors

Every API endpoint is like a little recipe of its own. Get flour, crack eggs, mix.

Write down the recipe steps in the function which will be the implementation of the API endpoint. Each step should be another function. You can use local variables to pipe outputs from one step to other steps.

Take care of function names – they should be readable! Use words & terms from your customer’s domain. If the business deals with bags of cookies, there’s no need to introduce a BakedSweetsBucket, use a CookieBag instead.

Stub the recipe step functions like so:

```typescript
// Placeholder domain type; its real shape comes from the customer's domain.
interface BagOfCookies {}

function openCookieBag(bag: BagOfCookies): boolean {
  throw new Error("TODO");
}
```

This TypeScript sketch describes a function that just throws an error. This is useful when you have to stop the cycle to do something else (like sleep). When you get back to the code, just run the specification: the error traces will tell you exactly what is left to do.

Break down the recipe into functions to get them as small as possible. If some functions are more complex, implement them as sub-interactors, etc.
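To make the shape of an interactor concrete, here is a small sketch under stated assumptions: the endpoint `serveCookies`, the step functions and the `CookieBag` and `Plate` types are all hypothetical names invented for the example. The steps are already implemented here so the block runs. During the cycle they would start out as stubs.

```typescript
import { strict as assert } from "node:assert";

// Hypothetical domain types, named in the customer's own terms.
interface CookieBag {
  cookies: string[];
  sealed: boolean;
}
interface Plate {
  cookies: string[];
}

// Recipe steps: each one is a small function with a clear signature.
function unsealCookieBag(bag: CookieBag): CookieBag {
  return { ...bag, sealed: false };
}

function takeCookies(bag: CookieBag, count: number): string[] {
  return bag.cookies.slice(0, count);
}

function arrangeOnPlate(cookies: string[]): Plate {
  return { cookies: [...cookies].sort() };
}

// Interactor: the implementation of one API endpoint. It only lists the steps
// and pipes each output into the next step through local variables, so there
// is a single execution path through it.
function serveCookies(bag: CookieBag, count: number): Plate {
  const openedBag = unsealCookieBag(bag);
  const cookies = takeCookies(openedBag, count);
  return arrangeOnPlate(cookies);
}

const plate = serveCookies(
  { cookies: ["oat", "ginger", "chocolate"], sealed: true },
  2,
);
assert.deepEqual(plate, { cookies: ["ginger", "oat"] });
```

If one of the steps grew its own sequence of sub-steps, it could become a sub-interactor with the same shape: a list of calls and no branching.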

Step 4: Logic

Having function stubs means we know the APIs of the functions. A function’s API is just its signature: what it takes as arguments, and what it should return. Dynamically typed languages make signatures even simpler, but then more has to be checked with tests.

Test-drive each function:

- Write a small test for one behaviour of the function

- Run it: it should fail for an expected reason (only one). If it doesn’t, find out why.

- Implement just enough code in the function so the test passes.

- Write a small test for another behaviour of the function, etc.
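The loop above might look like this for one small logic function. This is only a sketch: `priceOfBag`, its discount rule and the prices are assumptions made up for the example, and the tests use Node's built-in `assert` module rather than any particular test framework.

```typescript
import { strict as assert } from "node:assert";

// Hypothetical logic function: the price of a bag of cookies, in cents.
// Each branch was added only after one of the tests below demanded it.
function priceOfBag(cookieCount: number, centsPerCookie: number): number {
  if (cookieCount < 0) {
    throw new RangeError("cookieCount must not be negative");
  }
  const fullPrice = cookieCount * centsPerCookie;
  // Bags of 12 or more cookies get 10% off.
  return cookieCount >= 12 ? Math.round(fullPrice * 0.9) : fullPrice;
}

// Micro-test 1: a small bag costs count times unit price.
assert.equal(priceOfBag(3, 50), 150);

// Micro-test 2: a bag of 12 gets the discount.
assert.equal(priceOfBag(12, 50), 540);

// Micro-test 3: a negative count is rejected.
assert.throws(() => priceOfBag(-1, 50), RangeError);
```

Each test was meant to fail for exactly one reason before its branch existed: the first against the stub, the second against the missing discount, the third against the missing guard.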

Step 5: Adapters

Some recipe functions do not compute or decide anything but just serve as senders or receivers of data from the outside world. These are called adapters. They shouldn’t have any logic in them, just mapping outside-data to/from inside-data.

Some examples of adapters are:

- DAO classes (Data Access Object)

- HTTP clients
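A DAO-style adapter along these lines could be sketched as follows. The record shape, the `RecordSource` interface and the `CookieBagDao` class are hypothetical. A real adapter would wrap an actual database driver or HTTP client, which is left out here so the sketch stays runnable.

```typescript
import { strict as assert } from "node:assert";

// Inside-data: the shape the rest of the system works with.
interface CookieBag {
  flavour: string;
  cookieCount: number;
}

// Outside-data: a hypothetical record shape owned by an external store.
interface CookieBagRecord {
  flavour_name: string;
  cookie_cnt: string;
}

// Hypothetical low-level access to the outside world (database, HTTP, ...).
interface RecordSource {
  get(id: string): CookieBagRecord | undefined;
  put(id: string, record: CookieBagRecord): void;
}

// Pure mapping between outside-data and inside-data.
function toCookieBag(record: CookieBagRecord): CookieBag {
  return { flavour: record.flavour_name, cookieCount: Number(record.cookie_cnt) };
}

function toRecord(bag: CookieBag): CookieBagRecord {
  return { flavour_name: bag.flavour, cookie_cnt: String(bag.cookieCount) };
}

// Adapter: it decides nothing, it only moves and maps data.
class CookieBagDao {
  private readonly source: RecordSource;

  constructor(source: RecordSource) {
    this.source = source;
  }

  find(id: string): CookieBag | undefined {
    const record = this.source.get(id);
    return record === undefined ? undefined : toCookieBag(record);
  }

  store(id: string, bag: CookieBag): void {
    this.source.put(id, toRecord(bag));
  }
}

// Exercising the adapter against an in-memory source.
const rows = new Map<string, CookieBagRecord>();
const dao = new CookieBagDao({
  get: (id) => rows.get(id),
  put: (id, record) => void rows.set(id, record),
});
dao.store("bag-1", { flavour: "ginger", cookieCount: 12 });
assert.deepEqual(rows.get("bag-1"), { flavour_name: "ginger", cookie_cnt: "12" });
assert.deepEqual(dao.find("bag-1"), { flavour: "ginger", cookieCount: 12 });
```

Keeping the mapping in two plain functions means the only untested part is the thin call into the outside world.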

Adapters can also be test-driven, although this is harder to do. Manual testing against a real (production) service or database is also possible (just be sure to use test data or harmless data). This is fine since adapters don’t change frequently. Be sure to re-test when the adapter or the service API changes. If you have automated tests for adapters, run them separately from other test runs, because they are typically much slower.

Finishing off

Repeat these steps for every feature, following the test-driven architecture cycle:

- Implement logic & adapters until the specification for that endpoint passes

- Write a specification for next endpoint

- Repeat

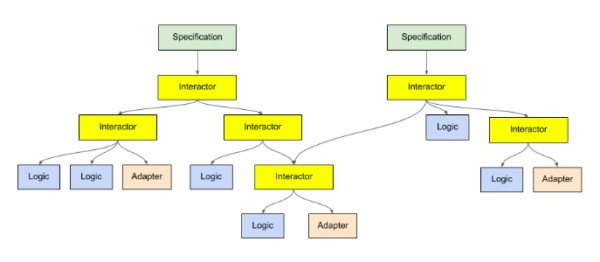

The resulting architecture should resemble this:

In the diagram, each Specification maps to one API endpoint. That doesn’t have to be the case: one feature will likely need multiple endpoints to deliver value to the customer.

Test-driven architecture test scopes

A final word about test scopes, which will hopefully explain how I got to this development pattern. As we see, there are only two types of tests using this pattern (excluding adapter tests for now):

- Specifications

- Micro-tests

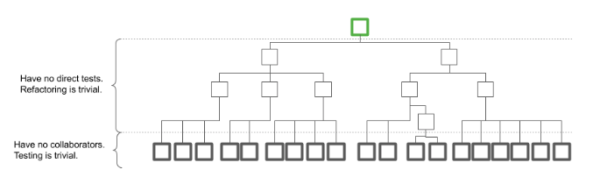

Specifications test the whole system, while micro-tests test only little bits of logic. None of the interactors have tests! Their correctness is indirectly tested by Specifications. This is fine because interactors should have only one execution path through them. The absence of tests makes them easy to change, move around, split or merge.

Conversely, all logic bits have tests. However, the bits are really small so it’s easy to write exhaustive tests for them, covering all possible cases which are maybe not covered by Specifications. Imagine a software system as a box which contains smaller boxes, which contain smaller boxes, … Each box can be covered by one or more tests:

Here the same thing is shown in a tree structure to better separate interactors from logic:

Writing tests for just any box is tricky because we don’t know when that box will have to be split into more boxes or merged with others to form a bigger box. When this happens, the box’s tests have to be changed as well. However, if we have an overarching specification which covers the business functionality of the system, we can split the boxes more and more to get the smallest nuggets of code possible. These will be much less likely to change (maybe we’ll just delete some or add new ones) *and* we can thoroughly test them.

This is how I arrived at this pattern of development. I can have my cake and eat it too, meaning:

- the bulk of the code has no tests so it can be freely rearranged

- multiple-execution path bits (logic) are thoroughly tested, so all conditions are covered

- entire business functionality is also tested, making sure the wiring of all components is correct
